Computers are very versatile beasts. Physicists are tempted to use them for real-time signal processing, for instance to implement a feedback loop on an instrument. If the frequencies are above 10 Hz, this is not as easy as one might think (after all, computers run at several GHz). I will try to explore some of the difficulties here.

Remember, these are just the ramblings of a physics PhD student. I have little formal training in IT, so don’t hesitate to correct me if I got something wrong.

Operating systems, timing and latencies

If you want to build an I/O system that interacts in real time with external devices, you will want to control the timing of the signals you send to the instruments.

Computers are not good at generating events at precise times. This is because modern operating systems share the processor time between a large number of tasks. Your process does not fully control the computer: it has to ask the operating system for processor time. The operating system shares this time between different processes, but it also has internal tasks to perform (like allocating memory). None of these operations is guaranteed to complete in a predictable amount of time [2], which makes it harder for a process to produce an event (e.g. a hardware output signal) at a precise instant.

One way to avoid these problems is to run the program on a single-task operating system, like DOS. Even then you have to be careful, as not every system call made by your program returns within a bounded amount of time. The proper solution is to use a hard real-time operating system, but this forces us onto a dedicated system and makes the job much harder, as we cannot use standard programming techniques and libraries.

I will attempt to study the limitations of a simple approach, using standard operating systems and programming techniques, to put numbers on the performance one can expect.

Real-time clock interrupt latency

The right tool to control timing under Linux is the real-time clock (RTC) [3]. It can be used to generate interrupts at a given frequency or at a given instant.
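To give an idea of what using the RTC looks like, here is a minimal sketch, assuming the Linux `/dev/rtc` character-device interface described in the rtc(4) man page [3]: the `RTC_IRQP_SET` ioctl sets the periodic rate, `RTC_PIE_ON` enables periodic interrupts, and each `read()` blocks until the next interrupt. The helper names `rtc_periodic_open`, `rtc_wait_tick` and `rtc_periodic_close` are hypothetical, my own.

```c
#include <fcntl.h>
#include <sys/ioctl.h>
#include <unistd.h>
#include <linux/rtc.h>

/* Open the RTC device and enable periodic interrupts at hz Hz.
   Returns a file descriptor, or -1 on failure. */
int rtc_periodic_open(const char *path, unsigned long hz)
{
    int fd = open(path, O_RDONLY);
    if (fd < 0)
        return -1;
    /* On the classic PC CMOS clock the rate must be a power of two, and
       unprivileged users are capped by /proc/sys/dev/rtc/max-user-freq. */
    if (ioctl(fd, RTC_IRQP_SET, hz) < 0 || ioctl(fd, RTC_PIE_ON, 0) < 0) {
        close(fd);
        return -1;
    }
    return fd;
}

/* Block until the next periodic interrupt. Returns the number of
   interrupts since the previous read (more than 1 means missed ticks),
   or -1 on error. */
long rtc_wait_tick(int fd)
{
    unsigned long data;
    /* Low byte: interrupt type; remaining bytes: interrupt count. */
    if (read(fd, &data, sizeof data) != (ssize_t)sizeof data)
        return -1;
    return (long)(data >> 8);
}

/* Disable periodic interrupts and release the device. */
void rtc_periodic_close(int fd)
{
    ioctl(fd, RTC_PIE_OFF, 0);
    close(fd);
}
```

A program would typically open `/dev/rtc` with, say, 1024 Hz, then call `rtc_wait_tick` in a loop. Whether a given rate is accepted depends on the driver and on the user limit mentioned further down.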

To quote Wikipedia: “in computing, an interrupt is an asynchronous signal from hardware indicating the need for attention or a synchronous event in software indicating the need for a change in execution”. In our case, the interrupt is a signal generated by the real-time clock and caught by a process.

I have run a few experiments on the computers available to me to test the timing reliability of these interrupts, that is, the time it takes for the process to receive the interrupt. This is known as “interrupt latency” (for more details see this article). It limits both the response time and the timing accuracy of a program that does not monopolize the CPU, as it corresponds to the time the OS needs to hand control over to the program.

The experiment and the results

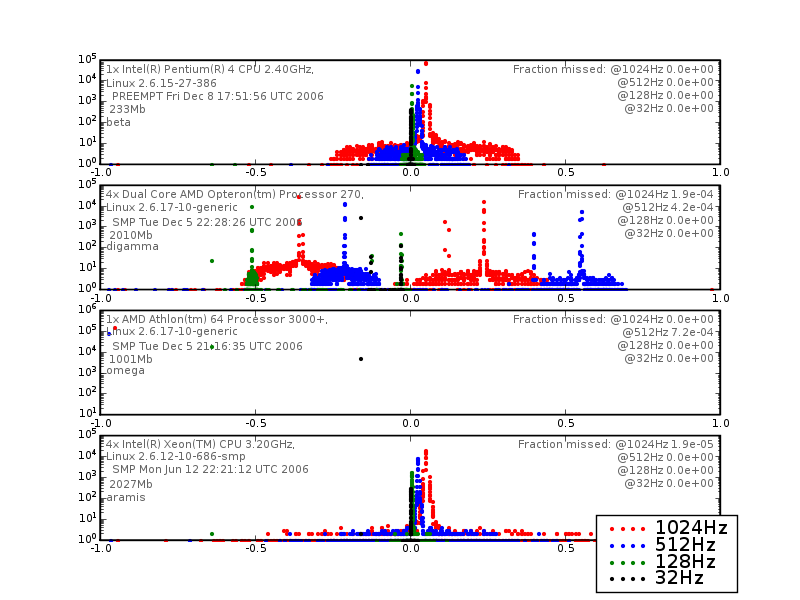

I used a test program to measure interrupt latency [4] on Linux. The test code first sets the highest scheduling priority it can, then asks to be woken up at a given frequency f by the real-time clock. It checks the clock to see whether it was really woken up when it asked to be, and computes the difference between the measured delay between two interrupts and the theoretical one, 1/f. The figure above shows histograms of these differences on different systems, with the delay plotted in units of the period 1/f.
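The bookkeeping described above can be sketched in a few lines of C. This is not the actual test code: it assumes the wake-up times have already been recorded in seconds, and the number and width of the bins (`NBINS`, `BIN_WIDTH`) are arbitrary, hypothetical choices.

```c
#include <stddef.h>

#define NBINS 41        /* hypothetical: bins centred on -2 .. +2 periods */
#define BIN_WIDTH 0.1   /* bin width, in units of the period 1/f */

/* Histogram of (measured interval - 1/f), in units of the period 1/f.
   t[] holds n wake-up times in seconds; values beyond +/-2 periods are
   accumulated in the first or last bin. */
void latency_histogram(const double *t, size_t n, double f,
                       unsigned long hist[NBINS])
{
    const double period = 1.0 / f;
    for (size_t b = 0; b < NBINS; b++)
        hist[b] = 0;
    for (size_t i = 1; i < n; i++) {
        double err = (t[i] - t[i - 1] - period) / period;
        double x = err / BIN_WIDTH;
        /* round to the nearest bin, then shift so that 0 is the centre */
        long b = (long)(x >= 0 ? x + 0.5 : x - 0.5) + NBINS / 2;
        if (b < 0)
            b = 0;
        if (b >= NBINS)
            b = NBINS - 1;
        hist[b]++;
    }
}
```

For instance, at f = 1000 Hz the wake-up times {0, 0.001, 0.002, 0.0035} s give two intervals in the centre bin and one interval half a period late.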

While the code was running, I put some stress on the system: pinging google.com, copying data to the disk, and calculating an MD5 hash. This is not meant to be representative of any particular use; I just did not want the system to be idle apart from my test code. The tests were run under a GNOME session, but without any user action.

Interpretation of the results

I am no kernel guru, so my interpretations may be imprecise, but I can see that the results are pretty bad.

The jitter can reach half a period at 1 kHz. Depending on how narrow a linewidth you need for your “digital oscillator”, this jitter limits the frequency up to which the computer can be used as one.
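To see why the same latency hurts more at higher rates, assume (a simplification of mine, not something measured here) that the software jitter δt is a fixed amount of time, independent of the requested frequency f. Expressed in units of the period T = 1/f, it then grows linearly with f:

$$
\frac{\delta t}{T} = \delta t \, f, \qquad
\delta t \simeq 0.5\ \mathrm{ms}:\quad
f = 100\ \mathrm{Hz} \Rightarrow 0.05, \quad
f = 1\ \mathrm{kHz} \Rightarrow 0.5, \quad
f = 10\ \mathrm{kHz} \Rightarrow 5 .
$$

So a jitter that is negligible at 100 Hz already amounts to half a period at 1 kHz.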

This also tells us that an interrupt request takes on average 0.5 ms to get through to the program it targets. This gives an estimate of the time it takes for an event (for instance one generated by an I/O card) to reach a program that is not currently running.

Keep in mind that this experiment only measures the jitter and frequency offset due to software imperfections (the kernel and the operating system). If you want to control an external device, you must add on top of this all the delays of the I/O bus and buffers.

An interesting remark is how much the results vary from one computer to another. Quite clearly, omega’s RTC is not working properly; this is probably due to driver problems. Beta has good results, probably thanks to its preemptible kernel. The results of our computer (digamma) are surprisingly bad: this is a powerful 4-CPU computer. It seems to me that the process may be getting moved from one CPU to another, which generates a large jitter. Aramis is a 2-CPU box (with multithreading, which is why it appears as 4 CPUs), and it performs much better. The CPUs are different, and so are the kernel versions, but I would expect more recent kernels to fare better.

The take-home message: do not trust computers below the millisecond.

Other sources have indeed confirmed that with a standard Linux kernel at the time of writing (Linux 2.6.18), interrupt latency is of the order of a millisecond. The RT-preempt kernel patch (“PREEMPT_RT”) has been measured to bring the interrupt latency down to 50 microseconds, which is of the order of the hardware limit.

Implications of this jitter

These histograms can be seen as frequency spectra of the signal generated by the computer.

We can see that the generated signal can be slightly off in frequency (the peak is not always centered on zero): the RTC is not well calibrated. This should not be a major problem if the offset is repeatable, as it can be measured and taken into account.
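Measuring such an offset only needs the average interval over a long run, since the jitter averages out while a systematic offset does not. Here is a minimal sketch, assuming the wake-up times are available in seconds; `effective_frequency` is a hypothetical helper name.

```c
#include <stddef.h>

/* Effective tick rate from n >= 2 wake-up times in seconds. Over a long
   run the mean interval (t[n-1] - t[0]) / (n - 1) averages out the
   jitter and leaves the systematic frequency offset. */
double effective_frequency(const double *t, size_t n)
{
    return (double)(n - 1) / (t[n - 1] - t[0]);
}
```

With jittery intervals of 2.1, 1.8 and 2.1 ms, the function still returns 500 Hz. A repeatable offset f_eff - f can then be compensated, for instance by counting time in units of 1/f_eff rather than 1/f.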

We can also see that the spectrum has a non-negligible width at high frequency. This means that in a servo-loop-like system, the computer will add high-frequency noise at around 1 kHz. It also means that the timing of an event created by the computer cannot be trusted at the millisecond level.

However, it is interesting to note that very few events fall outside ±1 period. This means that the computer rarely skips a beat: it does perform the work reliably, it just does not deliver it on time. So if we correct for this jitter, the computer can act as a servo loop up to 1 kHz. The preemptible kernel performs very well in terms of reliability, even though it runs on an old box with little computing power.

Dealing with the jitter

First, we could try to correct for the jitter with a software trick. For instance, we could ask for the interrupt in advance, then hold the CPU by busy-waiting (to ensure that the scheduler does not switch us out) until the exact moment comes.
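Here is a minimal sketch of this trick, assuming a POSIX system. Instead of the RTC it uses `clock_nanosleep()` on `CLOCK_MONOTONIC` for the coarse wait, then spins on `clock_gettime()` for the last `margin_ns` nanoseconds. The margin must be larger than the latency measured above (around a millisecond here). `wait_until` and `now_ns` are hypothetical names. Note that the spin burns a whole CPU, and without a real-time scheduling priority the scheduler may still preempt it.

```c
#define _POSIX_C_SOURCE 200809L
#include <errno.h>
#include <time.h>

/* Current CLOCK_MONOTONIC time in nanoseconds. */
long long now_ns(void)
{
    struct timespec ts;
    clock_gettime(CLOCK_MONOTONIC, &ts);
    return (long long)ts.tv_sec * 1000000000LL + ts.tv_nsec;
}

/* Wait until the monotonic time target_ns: sleep until margin_ns before
   the target, then busy-wait for the remaining time. */
void wait_until(long long target_ns, long long margin_ns)
{
    long long early = target_ns - margin_ns;
    struct timespec ts;
    ts.tv_sec = early / 1000000000LL;
    ts.tv_nsec = early % 1000000000LL;
    /* Coarse wait: the kernel may wake us up late, by up to its latency.
       Returns at once if the wake-up time is already in the past. */
    while (clock_nanosleep(CLOCK_MONOTONIC, TIMER_ABSTIME, &ts, NULL) == EINTR)
        ;
    /* Fine wait: poll the clock until the exact instant. */
    while (now_ns() < target_ns)
        ;
}
```

For example, `wait_until(now_ns() + 2000000, 1000000)` returns 2 ms from now, having slept for roughly the first millisecond and spun for the rest.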

Another option is to use an I/O device with an embedded clock that corrects for the jitter, for instance a hardware-triggered acquisition card. I prefer this solution, as it is more versatile and scalable.

This brings us to something that seems to be quite general with real-time computer control: buffers and external clocks. The computer has the processing power to do the work in the required amount of time. The buffer and the external clock correct for the jitter introduced by the software.
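The following toy model (entirely hypothetical, not a model of any particular card) illustrates why a buffer plus an external clock absorbs the jitter: the device clocks one sample out of a FIFO at every period, while the computer writes samples at late, jittery instants. Times are in units of the device period.

```c
#include <stddef.h>

/* Toy model: the device clocks one sample out of a FIFO at each tick
   k = 0, 1, ..., n_ticks - 1 (time in units of the device period). The
   computer writes `chunk` samples at each of the sorted times write_t[].
   The FIFO starts with `prefill` samples. Returns the number of ticks at
   which the FIFO was empty, i.e. output samples that were missed. */
size_t count_underruns(const double *write_t, size_t n_writes,
                       size_t chunk, size_t prefill, size_t n_ticks)
{
    size_t level = prefill, next = 0, underruns = 0;
    for (size_t k = 0; k < n_ticks; k++) {
        /* Deliver every write that completed before this tick. */
        while (next < n_writes && write_t[next] <= (double)k) {
            level += chunk;
            next++;
        }
        if (level > 0)
            level--;        /* sample clocked out exactly on time */
        else
            underruns++;    /* FIFO empty: this output is missed */
    }
    return underruns;
}
```

If every write arrives half a period late, an empty FIFO misses the first output, but a single pre-filled sample is enough to absorb the jitter: the output timing is then set entirely by the device's clock.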

Finally, recompiling a kernel with the RT-preempt patch would probably help a lot, given that it reduces the interrupt latency by more than an order of magnitude (from about a millisecond to about 50 microseconds).

Technical details about the experiment

The measuring code

The measurement works as follows. A small C program (borrowed and adapted from Andrew Morton’s “realfeel.c”) asks for the highest scheduling priority it can get, then sets the real-time clock to generate an interrupt at a given frequency. It then loops, waiting for the real-time clock (RTC). The OS schedules other tasks during the waiting period, but when the RTC generates the interrupt, the OS gives the CPU back to the program. The program then compares the time elapsed since the previous interrupt with the expected period and records the difference. The results are stored in a histogram file.
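The structure of such a measuring loop can be sketched as follows. This is not realfeel.c: as a stand-in I use `clock_nanosleep()` with absolute deadlines on `CLOCK_MONOTONIC` instead of blocking on `/dev/rtc`, and `raise_priority` and `sample_wakeups` are hypothetical names. The recorded times can then be turned into a histogram of the interval errors.

```c
#define _POSIX_C_SOURCE 200809L
#include <errno.h>
#include <sched.h>
#include <stddef.h>
#include <time.h>

/* Ask for the highest SCHED_FIFO priority. This normally requires root
   (or CAP_SYS_NICE); returns 0 on success and -1 if refused. */
int raise_priority(void)
{
    struct sched_param sp;
    sp.sched_priority = sched_get_priority_max(SCHED_FIFO);
    return sched_setscheduler(0, SCHED_FIFO, &sp) == 0 ? 0 : -1;
}

/* Request n wake-ups at f Hz and store the actual wake-up times, in
   seconds, in t[]. Deadlines are absolute, so a late wake-up does not
   shift the following ones. */
void sample_wakeups(double f, double *t, size_t n)
{
    const long period_ns = (long)(1e9 / f);
    struct timespec next, now;

    clock_gettime(CLOCK_MONOTONIC, &next);
    for (size_t i = 0; i < n; i++) {
        /* advance the deadline by exactly one period */
        next.tv_nsec += period_ns;
        while (next.tv_nsec >= 1000000000L) {
            next.tv_nsec -= 1000000000L;
            next.tv_sec++;
        }
        while (clock_nanosleep(CLOCK_MONOTONIC, TIMER_ABSTIME, &next, NULL) == EINTR)
            ;
        clock_gettime(CLOCK_MONOTONIC, &now);
        t[i] = (double)now.tv_sec + (double)now.tv_nsec * 1e-9;
    }
}
```

Calling `raise_priority()` first (as root) and then `sample_wakeups(1024.0, t, n)` mimics the structure described above; without the priority boost the loop still runs, with a normal scheduling priority.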

The stress code

I have a very ugly way of putting stress on the computer, so that the kernel actually schedules other tasks. I did not put tremendous stress on the CPU, as I wanted to simulate standard use cases. This is how I did it:

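```bash
# Repeat the stress pattern 11 times (i = 0..10)
for (( i = 0 ; i <= 10; i++ ))
do
    # network activity, in the background
    ping -c 10 www.google.com &
    # CPU load: generate 40 MB of random data and hash it, in the background
    dd if=/dev/urandom bs=1M count=40 | md5sum - &
    # disk I/O: write 500 MB, flush it to disk, then remove the file
    dd if=/dev/zero of=/tmp/foo bs=1M count=500
    sync
    rm /tmp/foo
done
```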

Three tasks run in parallel: pinging Google, calculating the MD5 hash of a random chunk of data (which also means generating it), and writing 500 MB to the disk. If the system and the network are fast enough, the first two tasks finish before the last one; this is intentional.

Making your own measurements

You can reproduce the histograms under Linux by running the “stresstest.sh” script provided in the following archive. The plots can be obtained by running the “process.py” Python script (requires SciPy and matplotlib). You may have to increase the user limit on the real-time clock frequency, which you can do by running (as root) “echo 1024 > /proc/sys/dev/rtc/max-user-freq”.

Send me the results directory created by the “stresstest.sh” script on your box: I am very interested in gathering more statistics.

Conclusion

The jitter measurement is interesting not because it shows the absolute limit of the technology (hard real-time OSs, like RTLinux, can go much further), but because it shows the performance achievable with simple techniques. Looking at this data, I would say that anything with frequencies below 10 to 100 Hz is fairly easy to achieve with RTC interrupts, anything around several kilohertz can be done with a bit more work, and anything above that requires a lot of work.

My current policy is to try to move anything with speeds above 10 Hz to embedded devices.

Acknowledgments

I would like to thank Nicolas George for enlightening discussions on these matters, as well as for useful questions on the purpose of this experiment. I would also like to thank David Cournapeau for pointing me to interesting references and to the Linux Audio Developer mailing list for more information.

References

[1] Wikipedia article on real-time computing: http://en.wikipedia.org/wiki/Real-time_computing

[2] A very clear article about fighting latency in the Linux kernel: http://lac.zkm.de/2006/papers/lac2006_lee_revell.pdf

[3] About the RTC: http://www.die.net/doc/linux/man/man4/rtc.4.html

[4] What this code actually measures is, in technical terms, the interrupt latency, that is, the time it takes for the kernel to catch the interrupt, and the rescheduling latency, that is, the time it takes for the kernel to switch from one process to another.

[5] A different benchmark, which probably studies the intrinsic kernel limits more directly than my code: http://lwn.net/Articles/139403/

[6] Another benchmark, which also covers the RT-preempt patch and shows the impressive improvements achieved with it: http://kerneltrap.org/node/5466

[7] A course on real-time computing, with lecture notes: http://lamspeople.epfl.ch/decotignie/#InfoTR