The Scipy 2011 conference in Austin

Last week, I was at the Scipy conference in Austin. It was really great to see old friends, and Austin is such a nice place.

The Scipy conference was held in UT Austin’s conference center, which is a fantastic venue. This is the first geek’s conference I have been at where the wireless network worked flawlessly with a good bandwidth, even thought 200 geeks were pounding on it. As a tutorial presenter, this was incredibly useful.

Conference highlight

Here is a short list of what I felt were the big trends and highlights of the conference. This is obviously biased by my own interests. I am not listing parallel computing, as it is clearly an important area of progress and debates, but it has been the case for the last few years.

Eric Jone’s keynote

Of course Eric’s keynote was excellent. Eric is a great speaker and always has good insights on how to run a team and a project. This year he shared (some) of his tricks in making Enthought deliver on software projects: “What Matters in Scientific Software Projects? 10 Years of Success and Failure Distilled”. The video is not yet online, unfortunately. Grab it when you can.

Hilary Mason’s keynote

Hilary is an applied data geek, just what I like! She gave an interesting keynote on how bitly (an URL-shortening startup, for those living under a rock) mines the requests on the URLs that the serve to do things like ranking or phishing attempts detection. Of course, I couldn’t resist asking what tools they used, thinking that she would reply R. She mentioned that they did do some roll-their-own, but she mentioned mlpy and scikit-learn, with a mention that it was very nice, at which point I believe that I blushed. She stressed that R was hard to use and production and raised the point that most often academic software doesn’t pan out in these settings (I hope that I am not distorting her thoughts too much).

Statistics and learning

I had the feeling that statistics and data mining played a big role at scipy this year. Maybe it is because I am more tuned to these questions nowadays, but some signs do not lie. There was a special session on Python in data sciences, a panel discussion on Python in finance and many many statistics and data related talks, as well as two tutorials and a keynote.

In addition, on a personal basis it was really great to meet part of the team behind scikits.statmodels. We had plenty of very interesting discussions and they really help me understand the way that some statisticians abord data: very differently than me, because they have fairly little data, and can afford to inspect reports and graphs, whereas I rely more on automated decision rules.

IPython

Min gave an excellent tutorial on how to do parallel computing using IPython. These guys have certainly done an excellent job to make cluster-level programming in Python easier. While they don’t play yet terribly well with the restrictive job-queue policy of the clusters to which I have access, they have all the right low-level tools to address these issues and Min told me that they will be working on this next year.

Fernando gave an impressive talk on the new developments of IPython. In particular, the new Qt-based terminal is `really cool`_ and there is a web frontend in the works.

Cluster computing as facility

While I mention cluster computing, I must confess that I have always stayed away from this beast: I find it a time sink, and I find that I get more science done without it. This is why I really like the presentation of the PiCould guys on, … cluster computing! The reason I liked it, is that they start from the principle that your time is more important than CPU time. I hear so much about bigger better faster more high-performance computing when researchers forget to address the biggest issue:

… a whole generation of researchers turned into system administrators by the demands of computing - Dan Reed, VP Microsoft

Abstract code manipulation for numerical computation

Finally, a trend that is picking up in the Python-based scientific computing is the abstract manipulation of expressions to generate fast code. This ranges from JIT (just in time) compilation generating machine code, to rewriting mathematical expressions. Peter Wang had a talk in this alley, but the topic was also brough up be Aron Ahmadia. Of course this is not new: numexpr has been using these tricks for years, and more recently Theano has been making good use of GPUs thanks to them.

Seeing this topic emerges in more and more places fr good reasons: with faster and more numerous CPU, the number of operations a second is less the bottleneck, and the order in which they are applied, or the physical location, is becoming critical.

My own agenda

Sprinting on scikit-learn

We had two days of sprints after the conference. A huge number of people voted for sprint on the scikit-learn but only two people showed up: Minwoo Lee and David Warde-Farley. Thanks heaps to these guys! My priority for the sprint was to review and merge branches. That worked beautifully: we merged in the following features:

- Dirichlet-Process Gaussian mixture models, by Alex Passos

- Sparse PCA by Vlad Niculae.

- Speedups in Gaussian processes by Vincent Schut.

- Sparse implementation of the mini-batch k-means by Peter Prettenhofer.

In addition, David added dataset downloader for the Olivetti face datasets which is lightweight, but rich-enough to give very interesting examples.

My presentation

I gave a talk on my research work, and the software stack that undermines it: Python for brain mining: (neuro)science with state of the art machine learning and data visualization. I think that it was well received by the audience. What is really crazy is that I uploaded the slides on slideshare, and they got a ridiculous amount of viewing. I suspect that it is because of the title: brain mining does sound fancy.

Mayavi

Because of technical and political reasons, I cannot get Mayavi installed on the computers at work. This, and the fact that many people ask for help, but little contribute, even in the form of answers on the mailing list, had been mining me a bit. I got so much great feedback on Mayavi at the conference that I feel much more motivated to invest energy on it.

The Humain Brain Mapping conference in Quebec City

This blog post is getting too long. It is well beyond my own attention span. However scipy is not the only conference to which I have been recently. Two weeks before I was in Quebec, for the Human Brain Mapping conference. As each year, HBM is a fun ride. It has fantastic parties in the evenings. But I didn’t stay up too late as, this year was a busy for me: I was teaching in a educational course, and chairing a symposium, both on comparing brain functional connectivity across subjects.

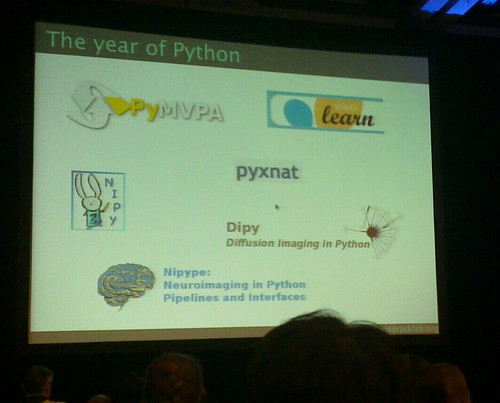

But the really big deal at HBM this year came at the end. As I was dosing off, vaguely listening to Russ Poldrak’s closing comments, he brought up on screen a slide entitled the year of Python. This is a big deal: we’ve been working for years to get Python in the neuroimaging word, and it is clearly making progress, despite all the roadblocks.